A year after social media platforms cracked down on election misinformation following the insurrection at the U.S. Capitol on Jan. 6, false claims of electoral fraud are still prevalent on Twitter and TikTok.

A new report by Advance Democracy, Inc., a nonpartisan nonprofit research organization, found that while explicit calls to violence on social media are not as prevalent as they were prior to Jan. 6, 2021, messages about the election being rigged or stolen still permeate the platforms.

Election misinformation and disinformation flourished on social media ahead of the Capitol insurrection, with accounts from the likes of far-right activist Ali Alexander or QAnon celebrity Lin Wood peddling the Big Lie that the election was stolen from former President Donald Trump or calling for violence. Right-wing activists were able to mobilize followers for their so-called Stop the Steal campaign, bringing thousands of Trump supporters and conspiracy theorists to Washington, D.C., to pressure Congress against certifying the election for President Joe Biden. That disinformation campaign culminated in the Capitol insurrection.

After the insurrection, Twitter finally took steps to limit election misinformation and the far-right QAnon conspiracy theory, wiping 70,000 QAnon-related accounts from its platform by mid-January, as well as the former president’s account. TikTok took some steps in the month leading up to the 2020 election and immediately after it, limiting search capabilities on its platform for some terms associated with QAnon and banning some hashtags related to election misinformation.

But election misinformation has persisted. In the year since Jan. 6, two hashtags associated with this type of misinformation, #electionfraud and #voterfraud, were used in some 139,000 Twitter posts.

The top post came from @AZCountryPatri1, an account that was suspended from Twitter after the release of ADI’s report. The September tweet included the hashtags #ElectionFraud, #Dominion, and #ElectionIntegrity and a video of Hillary Clinton, which the account claimed was the former secretary of state explaining “what their plan was” to, presumably, steal the election.

That post got boosted by Jack Posobiec—a far-right activist who supported the so-called Stop the Steal campaign and peddled the Big Lie that the 2020 election was stolen—who retweeted @AZCountryPatri1 with a simple “omg” to his 1.5 million Twitter followers.

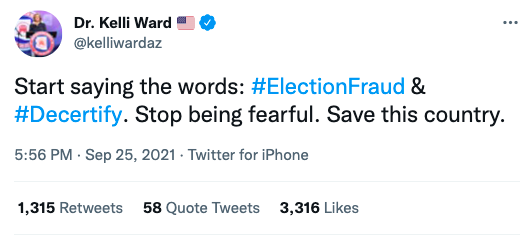

Kelli Ward, chair of the Arizona GOP, was responsible for the third most popular election fraud post, tweeting in September, “Start saying the words: #ElectionFraud & #Decertify. Stop being fearful. Save this country.” That garnered nearly 4,700 engagements. The Texas attorney general, Ken Paxton, was behind another viral tweet alleging voter fraud in Texas.

ADI also gathered data on the hashtags #BidenCheated, which was used in 43,680 posts on Twitter; #StolenElection, used 22,400 times; and #TrumpWon2020, used some 13,000 times.

Those hashtags just give a sampling of the election misinformation still prevalent on Twitter; presumably, many more tweets alleging voter fraud don’t include hashtags.

On TikTok, videos using two hashtags associated with false claims that the 2020 election was stolen from Trump have received 14.7 million views.

As noted earlier, TikTok addressed misinformation on its platform by making certain terms harder to find, removing the search capability for some hashtags associated with election misinformation. Hashtags like #riggedelection, #sharpiegate, #electionfraud, and #voterfraud are no longer searchable on its platform, but users have created other hashtags to spread the lie that Trump won the 2020 election.

The ADI report looked specifically at the use of the hashtags #TrumpWon2020 and #ItsNotOverTrump2020, which received 3.6 million views and 11.1 million views, respectively. (After the release of ADI’s report, TikTok made the #TrumpWon2020 hashtag no longer searchable.)

One video on TikTok promoted election conspiracy theorist Mike Lindell’s “documentary,” which featured QAnon celebrity Mike Flynn and purported to display evidence of election fraud, along with the hashtags #ElectionInterference and #TrumpWon2020. It racked up nearly 9,000 views.

Another video posted this past August, which used the hashtags #TrumpWon2020, #wethepeoplearepissed, and #standup, featured an audio recording of Trump saying, “If you fuck around with us, if you do something bad to us, we are going to do things to you that have never been done before.” The video featured text that read, “ENOUGH! PATRIOTS, TIME TO STAND!” Below that was an image of a bald eagle giving the middle finger and the caption “Jihad this!” while a Trump supporter nodded in agreement to the audio of Trump. As of January, this video had received more than 6,700 views and over 1,600 likes.

By cracking down on some accounts peddling disinformation and conspiracy theories, Twitter and TikTok have been able to somewhat limit the spread of election disinformation. QAnon-related accounts, for example, were responsible for 1 in 20 tweets about the election; removing 70,000 accounts that often amplify election misinformation and conspiracies is a good first step.

But with the 2022 midterm elections approaching, the Republican Party set on making it harder to vote in the name of preventing phantom voter fraud, and right-wing activists launching attacks on local election officials, disinformation experts are concerned about social media platforms’ readiness to address new election disinformation before it culminates in another event like Jan. 6.

January 12th, 2022

Awake Goy